Overview

This research was carried out in the SeNSE Lab at Northwestern University, with the aim of embodying the sensory-motor loop of seals. The objective is to understand and emulate the cognitive processes of a seal that are driven by its whiskers.

To that end, I developed an underwater ROV system with a mounted seal-inspired whisker array, to emulate the perceptual information that seals sense in real life and to develop a decision-making model based on that information. The intent is to understand the vibration signal of each tag caused by water ripples hitting the soft-robotic whisker system, and to correlate it with the physical movement of the ROV.

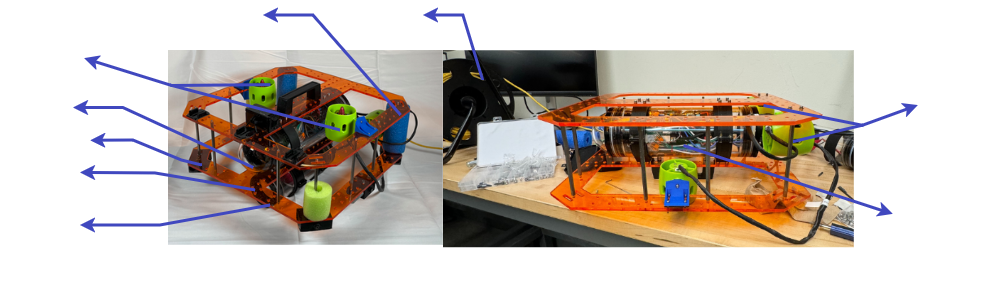

Hardware Setup

Real

Robotic Whisker Array

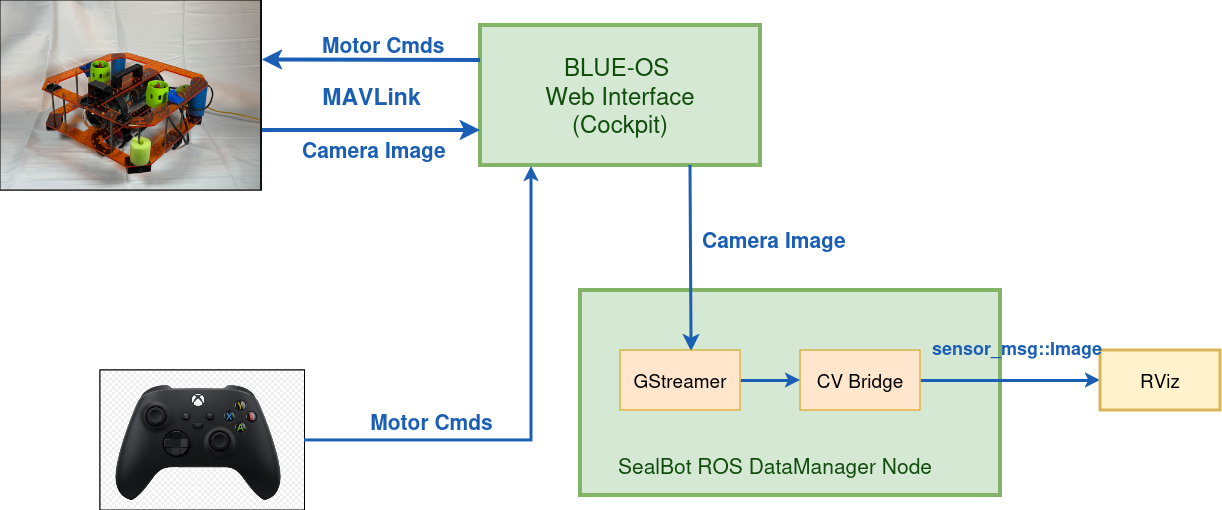

Perception Stack

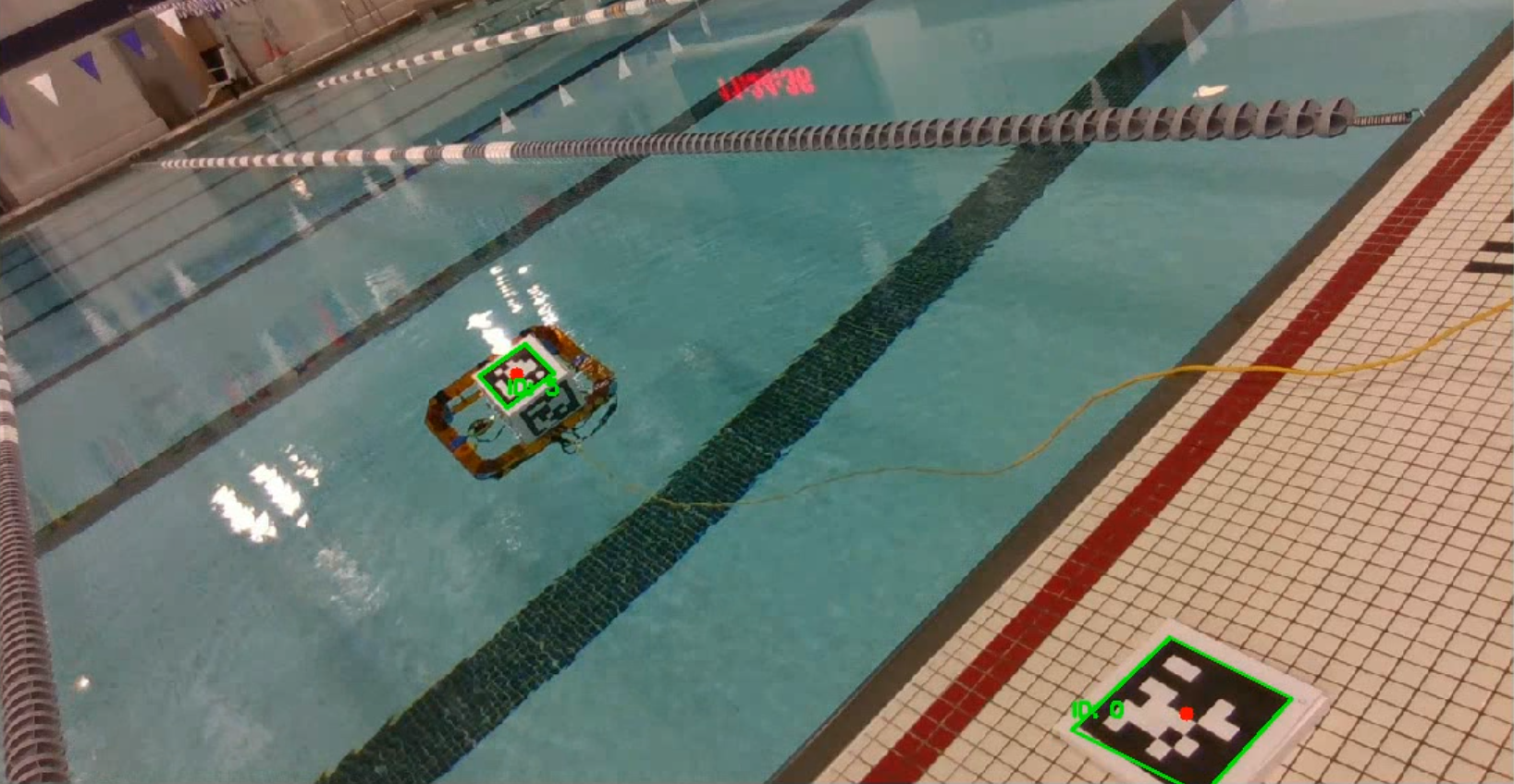

Ground-Truth

The perception stack has two main parts. The first is the ground truth, extracted from the external AprilTag system mounted on the ROV and observed by an external RealSense RGB-D camera. This gives the 6-dimensional [x, y, z, roll, pitch, yaw] trajectory of the rover's movement in the physical world, relative to a static tag outside the pool.
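The relative pose described above can be sketched as a composition of rigid transforms. This is a minimal illustration and not the project's code: it assumes the detector reports each tag's pose in the camera frame as a 3x3 rotation matrix and a translation vector, it assumes a ZYX (yaw-pitch-roll) angle convention, and the helper names `invert`, `compose`, `relative_pose` and `rot_z` are hypothetical.

```python
import math

def invert(R, t):
    """Invert a rigid transform (R, t): returns (R^T, -R^T t)."""
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return Rt, ti

def compose(Ra, ta, Rb, tb):
    """Compose two rigid transforms: apply (Rb, tb) first, then (Ra, ta)."""
    R = [[sum(Ra[i][k] * Rb[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = [sum(Ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return R, t

def relative_pose(R_cam_static, t_cam_static, R_cam_rov, t_cam_rov):
    """Pose of the ROV tag expressed in the static tag's frame."""
    R_inv, t_inv = invert(R_cam_static, t_cam_static)
    return compose(R_inv, t_inv, R_cam_rov, t_cam_rov)

def rot_z(yaw):
    """Rotation matrix for a pure yaw rotation (radians)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

# Made-up example values: static tag at (1, 0, 2) m with no rotation,
# ROV tag at (1.5, 0.2, 2) m and yawed by 90 degrees, both in the camera frame.
R_rel, t_rel = relative_pose(rot_z(0.0), [1.0, 0.0, 2.0],
                             rot_z(math.pi / 2), [1.5, 0.2, 2.0])

# Yaw under the assumed ZYX convention.
yaw = math.atan2(R_rel[1][0], R_rel[0][0])
```

Because both tag poses are measured in the same camera frame, the camera's own position cancels out, which is why a static reference tag is enough to express the rover's motion in a fixed world frame.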

Observed by Ext RealSense D435i

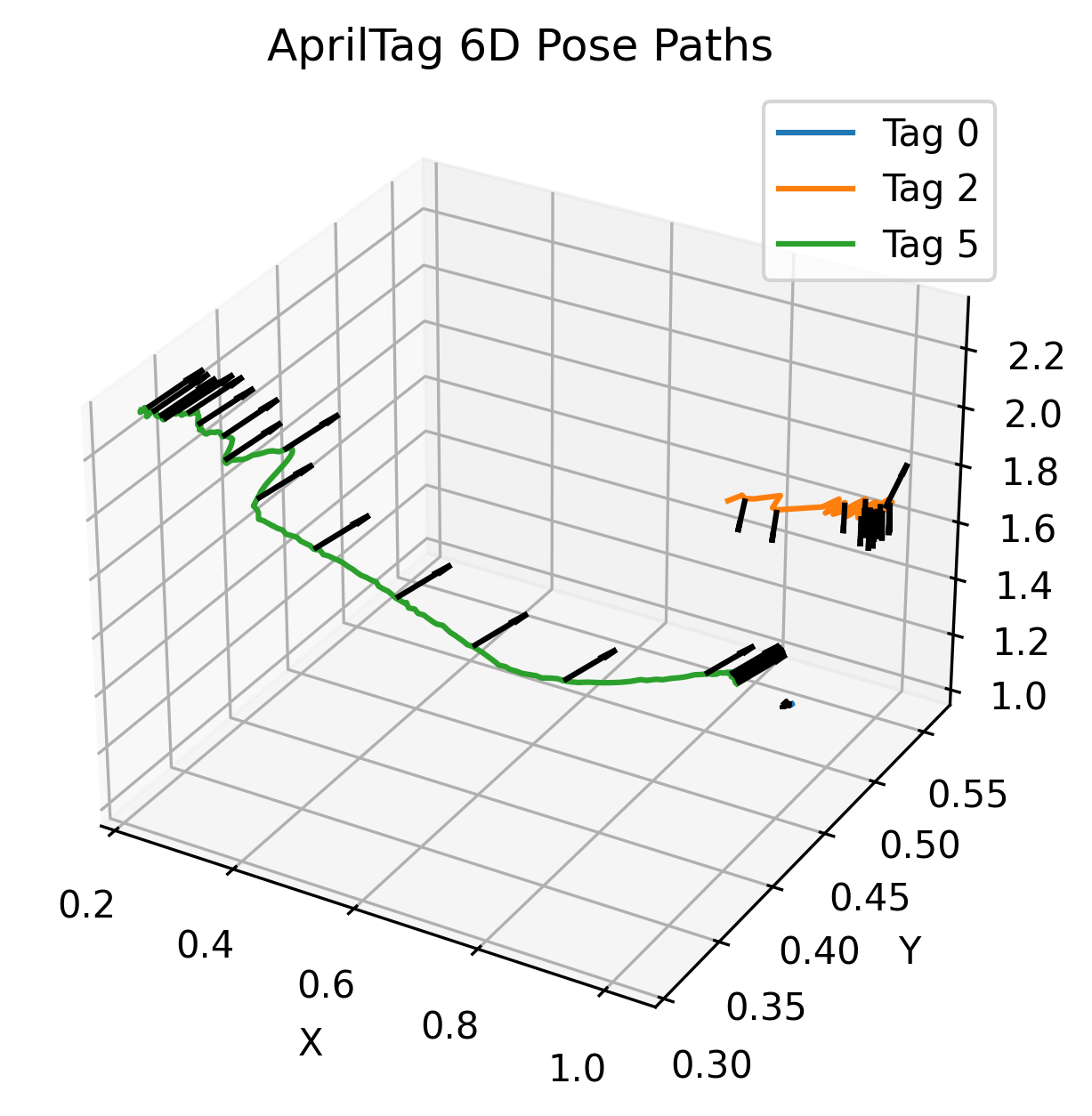

6D Pose Plot of Individual Tags

The plot above shows the 6D pose trajectory; the black lines indicate the orientation of the tag at discrete time intervals. A trajectory is plotted for each of the 5 on-board tags, so the rover pose is known at all times. The orange trajectory belongs to the static tag outside the pool, which is used to calculate the relative pose.

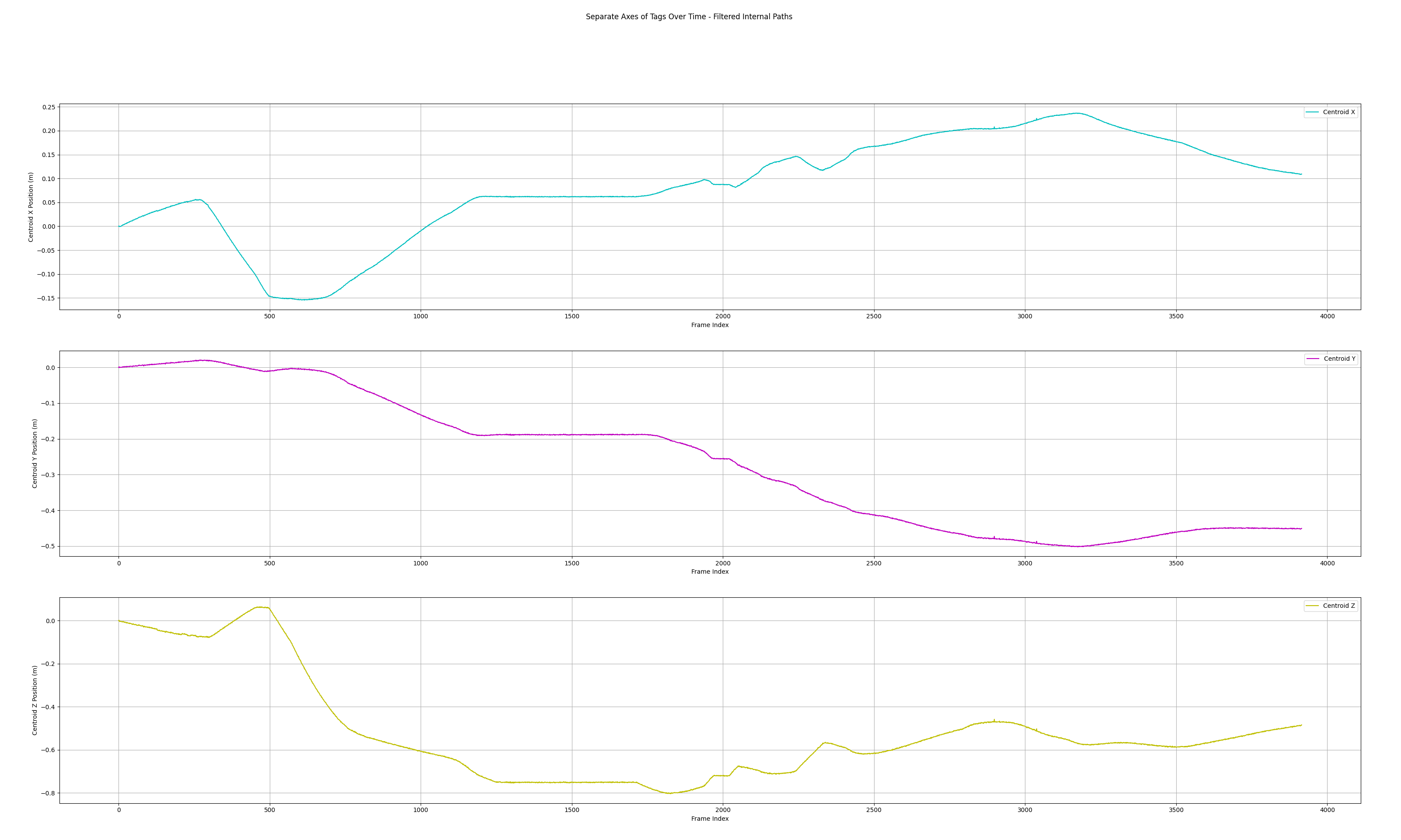

Tag Fusion Demo

Fused Centroid individual axis (x,y,z) trajectory Plot
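One simple way to fuse several tag detections into a single centroid is to average the positions of whichever tags are visible in a frame. The sketch below is an assumption about how such a fusion could look, not the method used in this project: it assumes each tag position is already expressed in the static tag's frame, that an undetected tag is reported as `None`, and the name `fuse_centroid` is hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def fuse_centroid(detections: Sequence[Optional[Vec3]]) -> Optional[Vec3]:
    """Average the positions of the tags detected in one frame.

    Returns None when no tag is visible, so the caller can skip
    or interpolate that frame.
    """
    visible = [p for p in detections if p is not None]
    if not visible:
        return None
    n = len(visible)
    x, y, z = (sum(p[i] for p in visible) / n for i in range(3))
    return (x, y, z)

# Made-up example frame: five on-board tags, two of them occluded.
frame = [(0.0, 0.0, 1.0), None, (0.2, 0.0, 1.0), (0.1, 0.3, 1.0), None]
centroid = fuse_centroid(frame)
```

Note that a plain average shifts when tags drop in and out of view; offsetting each tag to a common body point before averaging would avoid that, at the cost of needing the tag layout on the rover.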

Whisker Detection

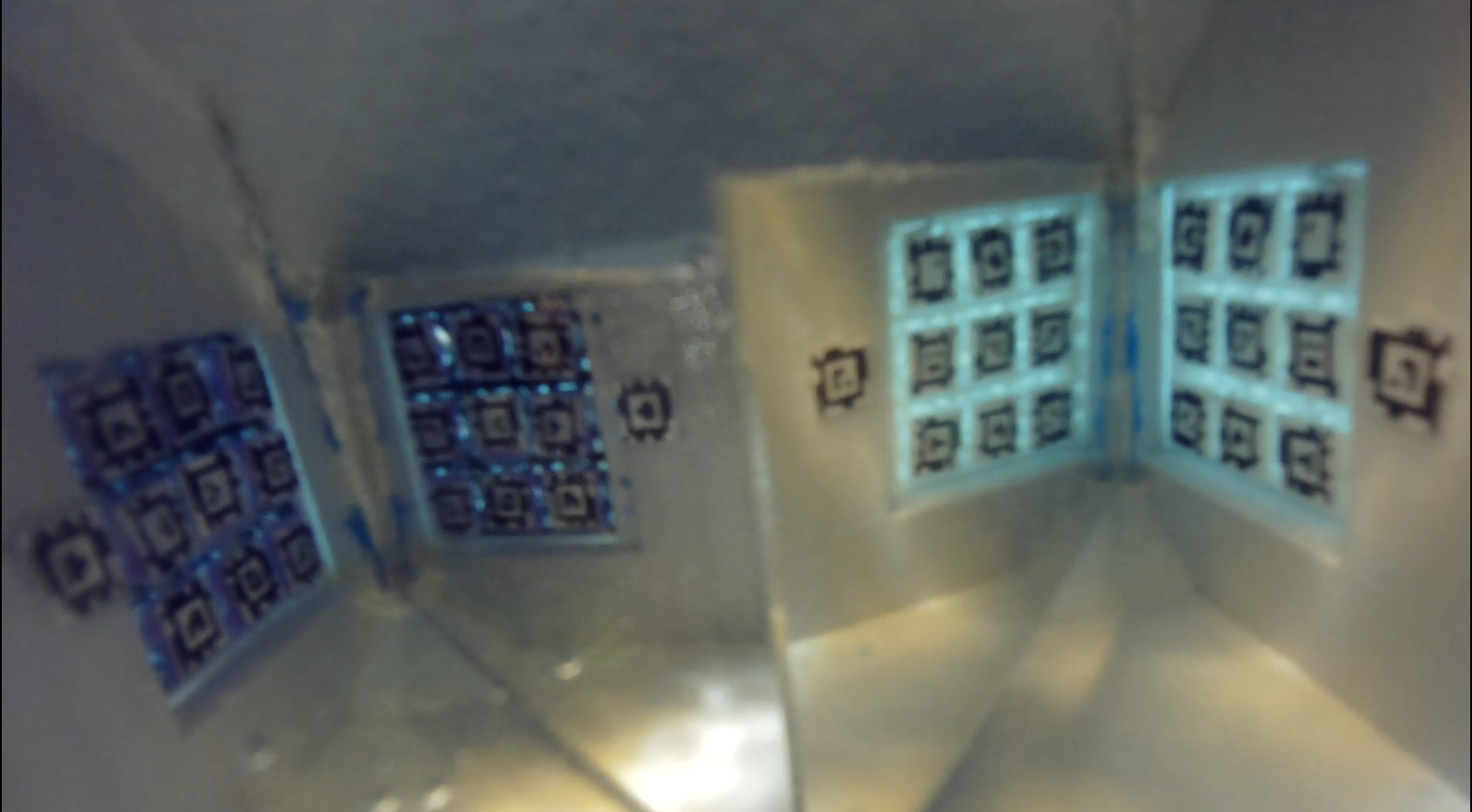

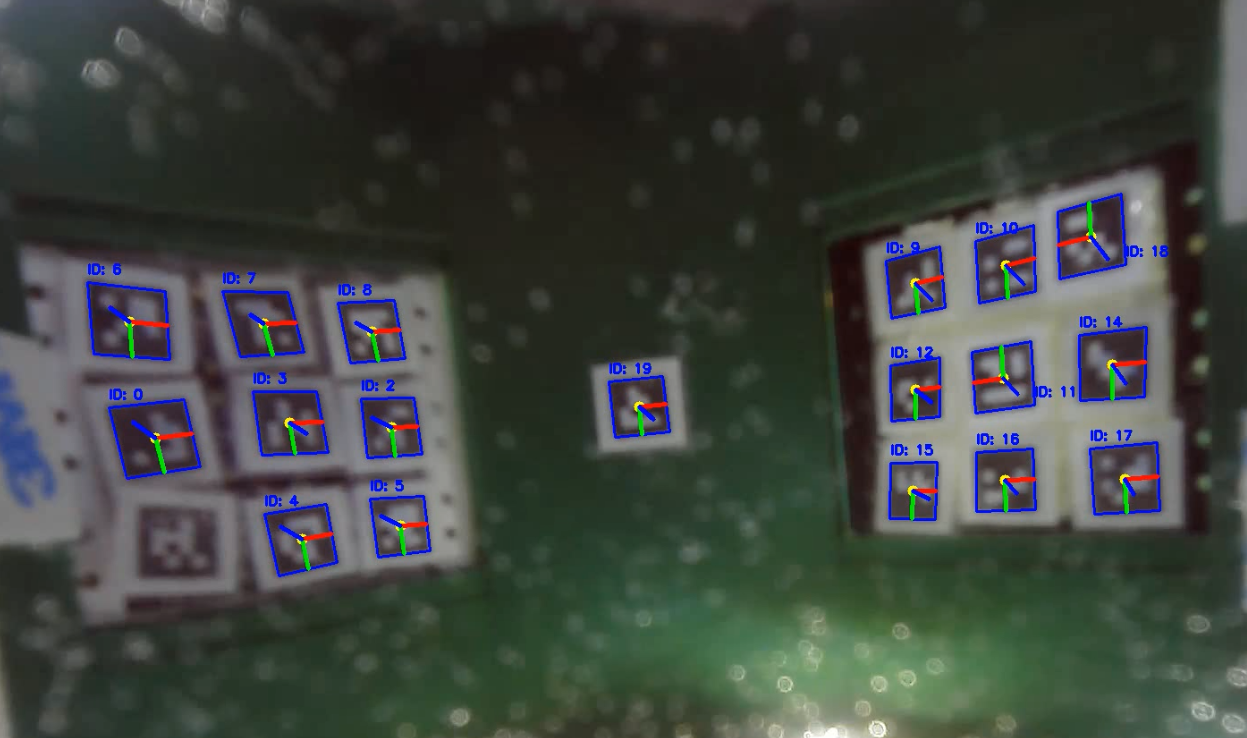

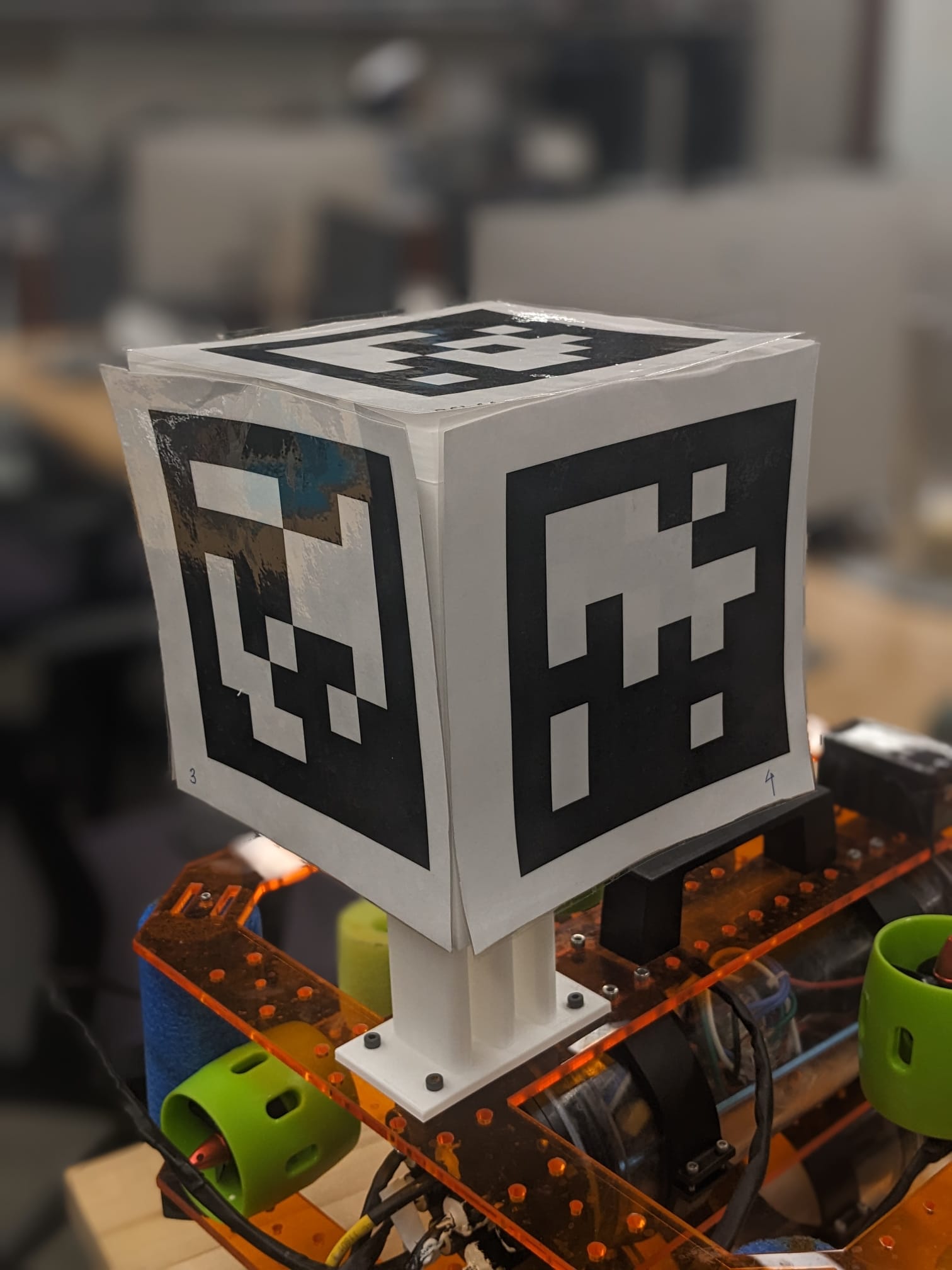

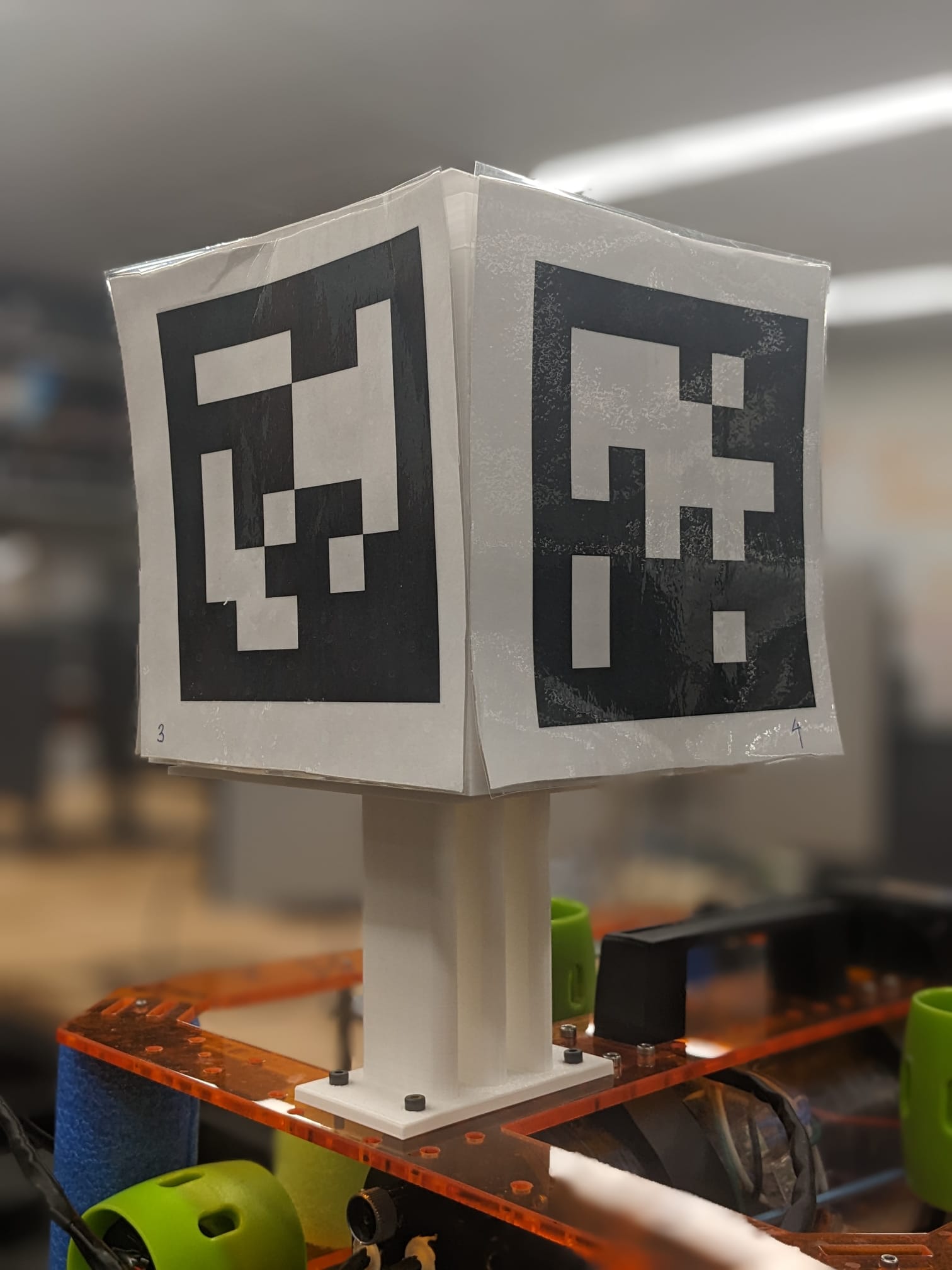

The second part of the perception stack is the information extracted from the AprilTags attached to the whisker array, which is mounted on the seal head and viewed by the monocular low-light Blue Robotics camera. The array is submerged in water, so detection is prone to issues caused by water reflections, refractive index changes, water ripples, varying lighting conditions, etc. After multiple iterations on the hardware, camera calibration, camera parameters and detection techniques, we finally found the optimal setup for reliable pose detection of the whisker ends.

Before: on land

After: in water

Whisker Perception

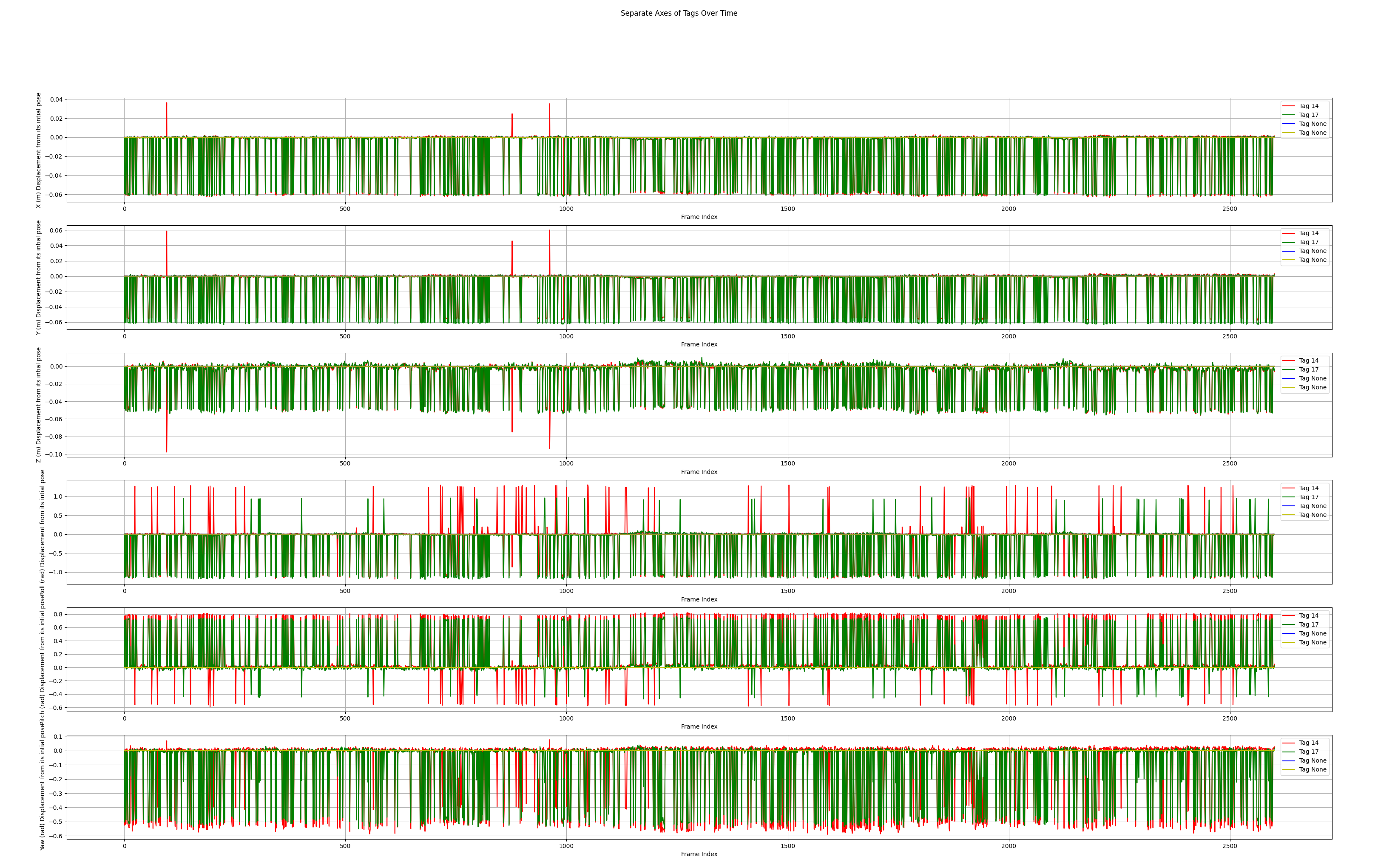

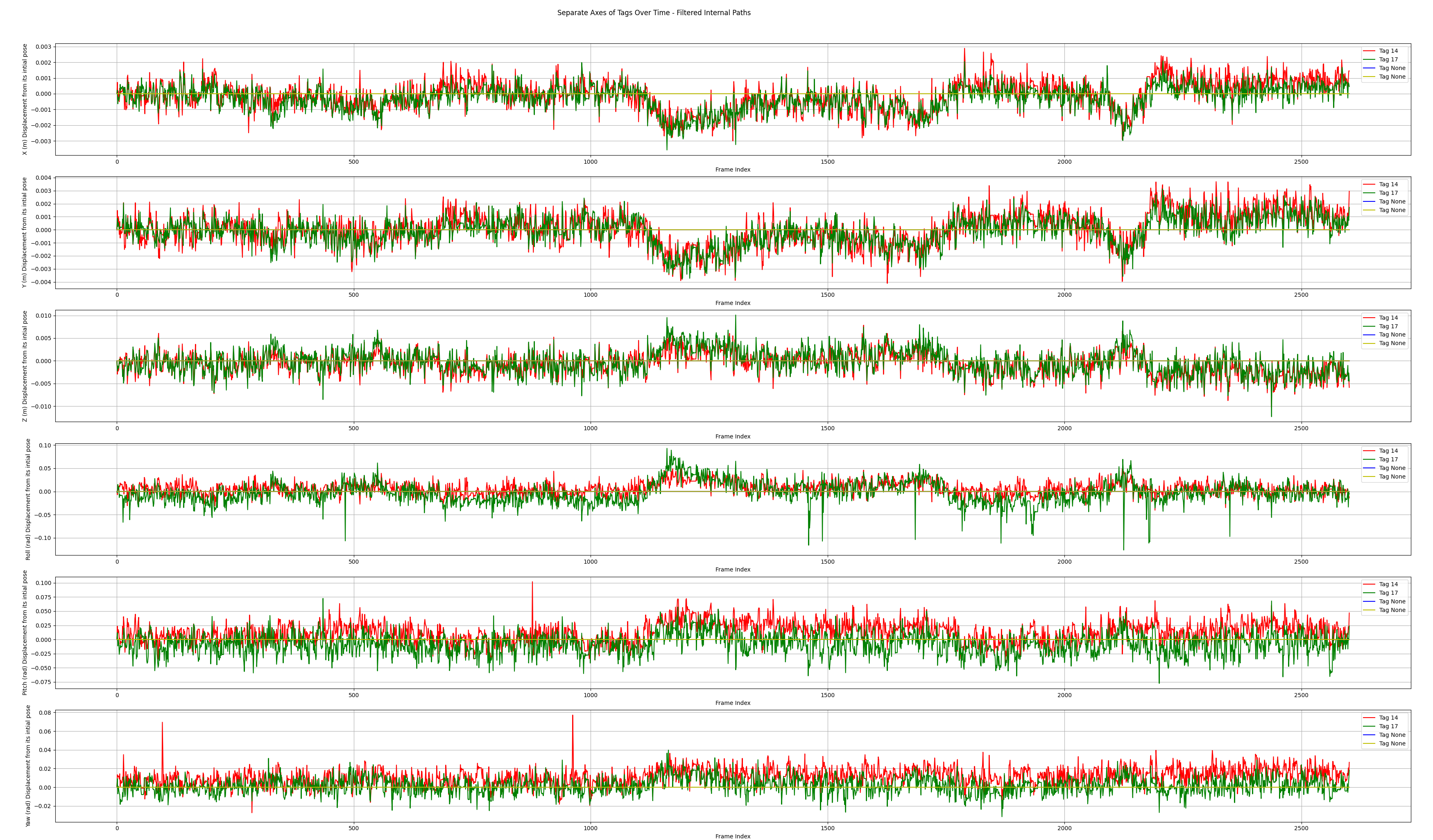

The raw data is processed and filtered to get interpretable signals.
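The filtering step is not detailed here. As an illustration only, a centered moving average is one of the simplest ways to smooth a noisy tag-displacement signal; the function name `moving_average`, the window size and the sample values below are all assumptions rather than the filter actually used.

```python
def moving_average(signal, window=5):
    """Centered moving average; the window shrinks near the edges."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out

# Made-up raw samples with a single large deflection at index 4.
raw = [0.0, 0.1, -0.1, 0.0, 2.0, 0.1, 0.0, -0.1, 0.1]
filtered = moving_average(raw, window=3)
```

A centered window uses samples from both sides of each point, so it introduces no time shift but can only be applied offline, after the recording is complete.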

Raw Signal

Filtered Signal

Data Stream Syncing

Motion Stack

Mechanical Design

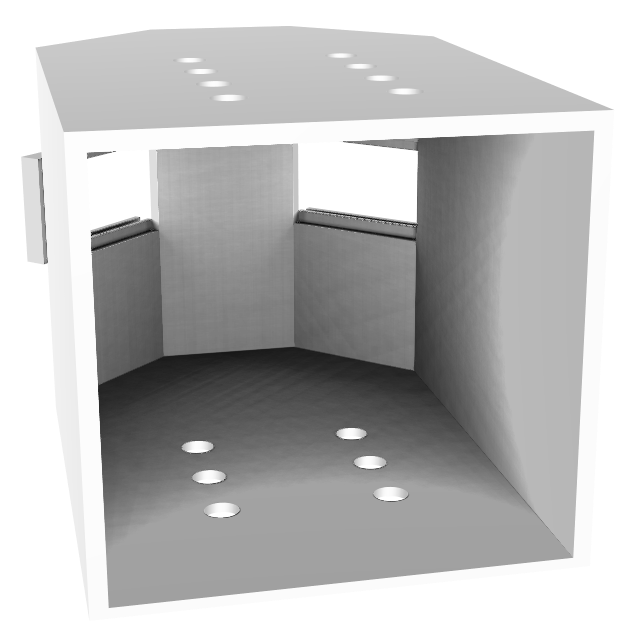

Seal Head

V1 -> V2 Improvements:

- The tag slots are inclined towards the ROV cam, giving it a much better viewing angle.

- Increased length to ensure the camera captures focused images.

- Threaded inserts for a more reliable screw fastening.

- Screws are fastened from the top, as the lower section is inaccessible most of the time.

- Covered top layer, which improves support for the whole head and prevents external lighting and reflection issues.

- Removed mirrors, as the tilted tag slots now do the job, which also eliminates the inversion step for detection.

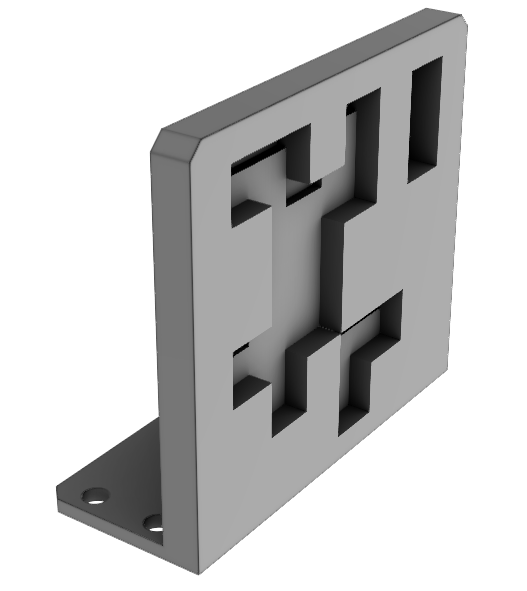

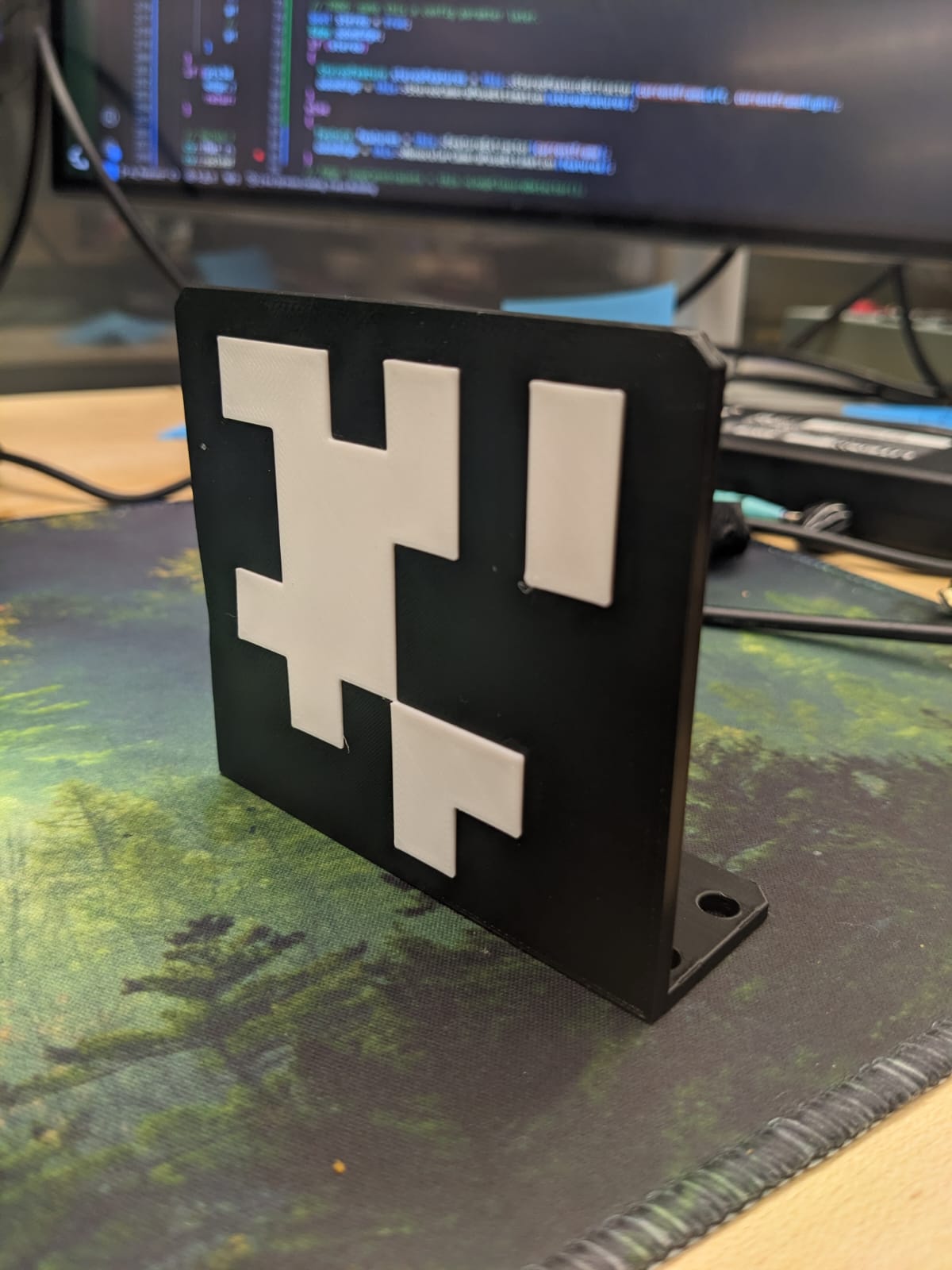

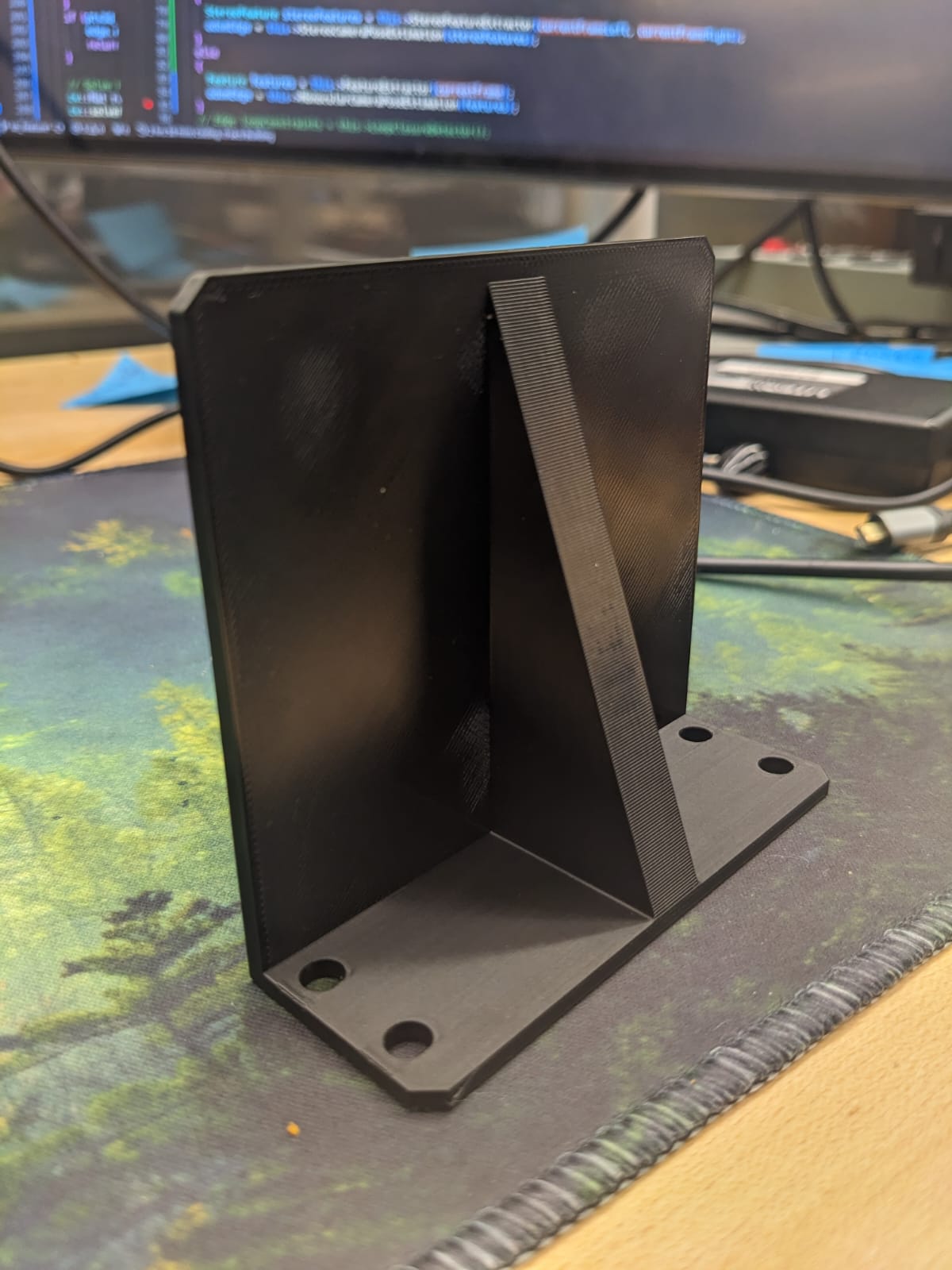

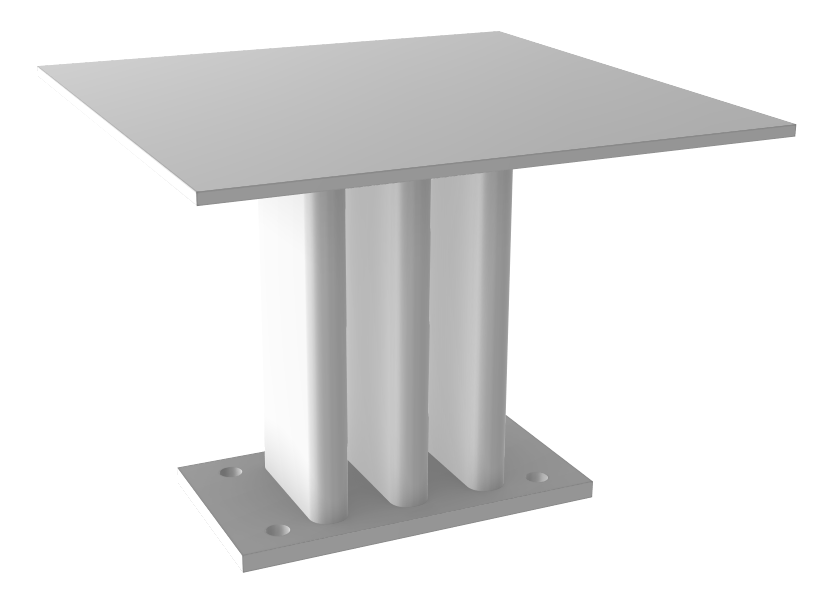

External AprilTag Mount

Decision-Model

Points of interest (POIs) are detected using signal processing techniques and correlate with the physical motion of the ROV, which suggests that the whiskers can be used as the sole perception sensor in a system.

Future Possibilities

- Develop a causal model [rolling-average-based wavelet translation and POI detection] that can be implemented on the ROV in real time.

- Extend the perception model to interpret velocity, acceleration and direction of motion, and eventually to extract the movement of an external neighbouring object (prey).

- Develop closed-loop control, where the decision model gives input to the motion model, and the resulting motion leads to the next state and new perception sensory data.
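To make the idea of a causal model concrete, the sketch below flags a sample as a point of interest when it deviates from the mean of the preceding samples by more than a threshold. It is a hedged illustration only: the wavelet stage is omitted, and the class name `CausalPOIDetector`, the window length, the threshold and the sample stream are all assumptions.

```python
from collections import deque

class CausalPOIDetector:
    """Flags samples that deviate from a trailing rolling mean."""

    def __init__(self, window: int = 20, threshold: float = 0.5):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, sample: float) -> bool:
        # Compare against the mean of past samples only,
        # so no future data is needed (causal).
        is_poi = False
        if len(self.history) == self.history.maxlen:
            baseline = sum(self.history) / len(self.history)
            is_poi = abs(sample - baseline) > self.threshold
        self.history.append(sample)
        return is_poi

# Made-up stream of filtered whisker samples with one jump at index 5.
detector = CausalPOIDetector(window=4, threshold=0.5)
stream = [0.0, 0.1, 0.0, -0.1, 0.05, 1.2, 0.1]
flags = [detector.update(s) for s in stream]
```

Because each decision depends only on samples already received, a detector of this shape can run sample by sample on the ROV; the trade-off is that nothing is flagged until the window has filled.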

Acknowledgements

I would like to thank Prof. Matthew Elwin, Dr. Mitra Hartmann and Kevin Kleczka for their advice and guidance throughout the project, and Ishani Narwankar and James Oubre for their work building the rover from scratch.